About Me

I'm Deenadayalan Dasarathan, a recent graduate (class of 2023) with a Master of Data Science from Northeastern University, Khoury College of Computer Sciences. I am actively seeking full-time roles as a Cloud Engineer, Data Engineer, DevOps Engineer, or MLOps Engineer.

During my time at Northeastern, I worked as a Graduate Research Assistant, where I made significant contributions to the research community. I also have extensive professional IT experience, which has given me a strong foundation across several roles: Assistant Data Scientist at Northeastern University, and Data Engineer, DevOps Engineer, and Java Developer at Tata Consultancy Services.

- Technical skills: Python, Machine Learning/AI (Pandas, NumPy, Matplotlib, Seaborn, Scikit-Learn, PyTorch), DevOps (ELK, Jenkins, Docker, Grafana), Databases (MySQL, PostgreSQL, NoSQL), Hadoop, MapReduce, Apache Spark, Scala, AWS, REST API, Java, Go

- Management skills: Agile methodologies, Process Improvement, Scrum, Planning & Execution

- My interests: Serverless architecture, cloud computing, IaC, ETL workflows, automation, monitoring and alerting systems, dashboards, and log analytics.

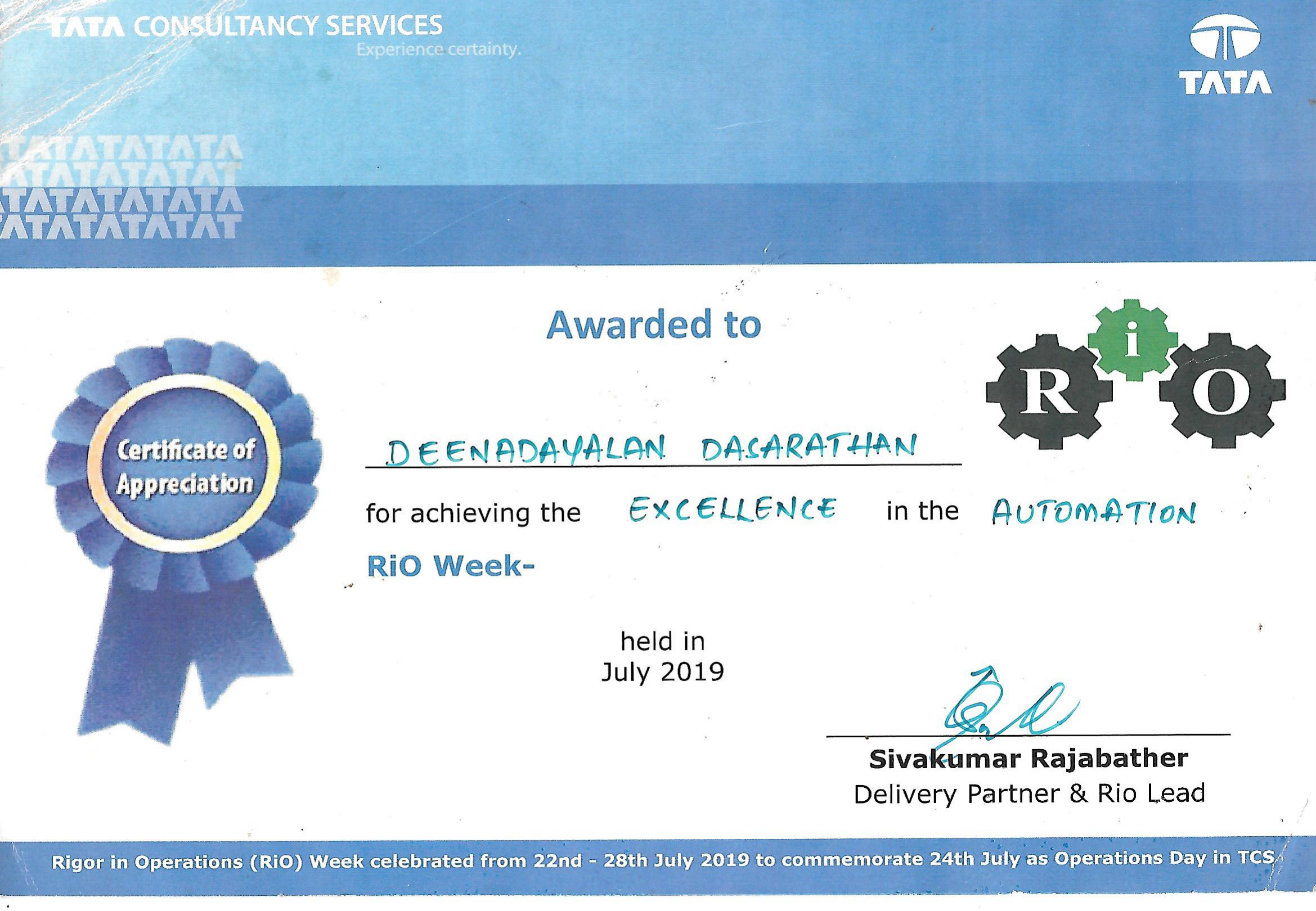

I am a strong advocate of continuous learning and skill development. I have demonstrated that commitment by earning AWS certifications in cloud computing. I have also received recognition for my work, reflecting the value I bring to projects and teams.

As a dedicated IT professional, I am driven to contribute my expertise and make a positive impact in the field. I am excited to explore opportunities where I can apply my knowledge and skills to drive innovative solutions and contribute to the success of an organization.

Certifications

Achievements

Projects

Pulsar Identification & Clustering

This project works with the HTRU2 dataset, which consists of pulsar candidates collected during the High Time Resolution Universe Survey (South). Each data point is labeled as either 0 (not a pulsar) or 1 (pulsar). The main objective is to classify each candidate as either a pulsar or interference. The best model achieved an accuracy of 0.9490 and an F1 score of 0.9475. The project also clusters the pulsars to visualize the underlying relationships between the data points.
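For readers unfamiliar with the two metrics quoted above, here is a minimal sketch of how accuracy and F1 are computed for binary labels. It is an illustration only, not the project's code: the function name `accuracy_and_f1` and the toy labels are hypothetical and do not come from HTRU2.

```python
def accuracy_and_f1(y_true, y_pred):
    """Return (accuracy, F1) for binary labels, where 1 marks a pulsar."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # F1 is the harmonic mean of precision and recall: 2TP / (2TP + FP + FN).
    denominator = 2 * tp + fp + fn
    f1 = 2 * tp / denominator if denominator else 0.0
    return accuracy, f1


# Hypothetical toy labels (not taken from the HTRU2 dataset).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
accuracy, f1 = accuracy_and_f1(y_true, y_pred)
print(f"accuracy={accuracy:.4f} f1={f1:.4f}")
```

F1 is worth reporting alongside accuracy on pulsar data because real pulsars are typically a small minority of the candidates, so accuracy alone can look good even when many pulsars are missed.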

Fake Review Detection

This project focuses on developing a fake review detector using NLP techniques. It involves preprocessing the data through text cleaning and feature engineering. Various supervised learning algorithms, including Logistic Regression, Multinomial Naïve Bayes, AdaBoost Classifier, Random Forest Classifier, and Passive Aggressive Classifier, are employed. The results indicate that models trained on lemmatized text data outperform those trained on stemmed data. Among the models, Logistic Regression achieves the best performance with an F1 score of 0.8757 and an accuracy of 0.8722. The project underscores the efficacy of NLP techniques in detecting fake reviews and suggests avenues for further enhancements in the field.

Resource Wait-time Prediction in HPC system

The project aims to estimate the wait time for job execution on a High Performance Computing (HPC) system using machine learning. The job scheduler in the HPC system does not provide information on queue time and requested resources. By analyzing job history, an ML model is built to predict the queue time based on the requested resources. This estimate tells users how long a job is likely to wait before they submit it to the HPC system. The project involves data extraction from the SLURM DB, data preprocessing, and training of regression models. The trained XGB model achieves an R² score of 0.88 and a mean absolute error of 35 minutes.
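The two regression metrics reported above can be sketched in a few lines. This is only an illustration of the definitions, not the project's evaluation code: the function names and the sample queue times (in minutes) are hypothetical.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def r2_score(actual, predicted):
    """Coefficient of determination: 1 - (residual SS / total SS)."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R² is undefined when all actual values are equal")
    return 1.0 - ss_res / ss_tot


# Hypothetical queue times in minutes (not taken from the SLURM data).
actual_wait = [10.0, 20.0, 30.0, 40.0]
predicted_wait = [12.0, 18.0, 33.0, 37.0]

mae = mean_absolute_error(actual_wait, predicted_wait)
r2 = r2_score(actual_wait, predicted_wait)
print(f"MAE={mae:.2f} min, R²={r2:.3f}")
```

The MAE is expressed in the same unit as the target, which is why it can be read directly as "minutes of error" for a wait-time estimate.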

CPUBoundBoT

CPUBoundBoT is a monitoring and alerting system developed in Go for HPC login nodes that tracks CPU utilization using cgroups. It collects successive CPU usage readings and compares them to a threshold limit. If a process exceeds the threshold, the user is notified via email with process details. Simultaneously, a text alert is sent to the System Admin Teams channel for immediate action. CPUBoundBoT ensures efficient resource management and helps prevent performance issues caused by high CPU usage on the login nodes.
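The threshold check described above can be sketched as follows. CPUBoundBoT itself is written in Go and reads usage from cgroups; this Python version only illustrates the decision logic. The function name, the `min_consecutive` parameter, and the rule that every one of the most recent readings must exceed the limit are assumptions made for the example.

```python
def exceeds_threshold(readings, threshold, min_consecutive=3):
    """Return True when the last `min_consecutive` readings all exceed `threshold`.

    Requiring several successive readings (an assumed policy) avoids
    alerting on a single short CPU spike.
    """
    if min_consecutive <= 0:
        raise ValueError("min_consecutive must be positive")
    if len(readings) < min_consecutive:
        return False  # not enough samples collected yet
    recent = readings[-min_consecutive:]
    return all(value > threshold for value in recent)


# Hypothetical CPU usage percentages for two processes.
sustained = [35.0, 92.5, 95.0, 97.1]
spiky = [92.5, 40.0, 97.1]

print(exceeds_threshold(sustained, threshold=90.0))  # sustained load
print(exceeds_threshold(spiky, threshold=90.0))      # brief spikes only
```

In a real deployment the `True` branch would trigger the email and Teams notifications; those integrations are left out here.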

Serverless CRUD Architecture

The user registration web application is designed using a Serverless architecture, ensuring high availability and scalability. AWS S3 is used for static web hosting, providing a reliable platform. The architecture incorporates various AWS services such as S3, Lambda, API Gateway, and DynamoDB. The user registration data is stored in a NoSQL database, DynamoDB, enabling efficient CRUD (Create, Read, Update, Delete) operations. This Serverless setup allows for easy management, cost optimization, and seamless scalability of the application.
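As a rough illustration of how one Lambda-style function can serve all four CRUD operations, here is a minimal sketch. It is not the application's code: an in-memory dictionary stands in for the DynamoDB table, the event is a simplified shape loosely modelled on an API Gateway proxy request, and the names `handler`, `_USERS`, and `_response` are hypothetical.

```python
import json

_USERS = {}  # in-memory stand-in for the DynamoDB table (assumption)


def _response(status, payload):
    """Build a simplified HTTP-style response dictionary."""
    return {"statusCode": status, "body": json.dumps(payload)}


def handler(event, context=None):
    """Route a request to Create, Read, Update, or Delete by HTTP method."""
    method = event.get("httpMethod")
    user_id = (event.get("pathParameters") or {}).get("id")
    body = json.loads(event.get("body") or "{}")

    if method == "POST":  # Create
        new_id = body.get("id")
        if not new_id:
            return _response(400, {"error": "id is required"})
        if new_id in _USERS:
            return _response(409, {"error": "user already exists"})
        _USERS[new_id] = body
        return _response(201, body)

    if method in ("GET", "PUT", "DELETE"):
        if user_id not in _USERS:
            return _response(404, {"error": "user not found"})
        if method == "GET":  # Read
            return _response(200, _USERS[user_id])
        if method == "PUT":  # Update: merge new fields, keep the key fixed
            _USERS[user_id] = {**_USERS[user_id], **body, "id": user_id}
            return _response(200, _USERS[user_id])
        del _USERS[user_id]  # Delete
        return _response(204, {})

    return _response(405, {"error": "method not allowed"})


created = handler({"httpMethod": "POST",
                   "body": json.dumps({"id": "u1", "name": "Ada"})})
fetched = handler({"httpMethod": "GET", "pathParameters": {"id": "u1"}})
print(created["statusCode"], fetched["body"])
```

Swapping the dictionary for real table calls would not change the routing structure, which is the part this sketch is meant to show.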

PageRank - Big Data

The project implements the PageRank algorithm in both MapReduce and Spark (Scala) to determine web page importance based on link structure. Large-scale web graphs are processed with iterative computations to calculate PageRank scores. The MapReduce and Spark programs run on an AWS EMR cluster for distributed processing. The results show that Spark achieves faster execution times than MapReduce, highlighting the advantages of Spark for iterative big data workloads.
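The iterative computation behind PageRank can be sketched on a single machine as follows. This is not the project's MapReduce or Spark implementation, only a small power-iteration version of the same idea. The function signature, the damping factor of 0.85, the fixed iteration count, and the four-page graph are assumptions made for the example.

```python
def pagerank(graph, damping=0.85, iterations=50):
    """Compute PageRank scores for a graph given as {page: [linked pages]}."""
    nodes = set(graph)
    for targets in graph.values():
        nodes.update(targets)
    n = len(nodes)
    if n == 0:
        return {}

    ranks = {node: 1.0 / n for node in nodes}  # start from a uniform distribution
    for _ in range(iterations):
        # Rank held by pages with no outgoing links is spread over all pages.
        dangling = sum(ranks[node] for node in nodes if not graph.get(node))
        base = (1.0 - damping) / n + damping * dangling / n
        new_ranks = {node: base for node in nodes}

        # Each page splits its damped rank equally among the pages it links to.
        for source, targets in graph.items():
            if not targets:
                continue
            share = damping * ranks[source] / len(targets)
            for target in targets:
                new_ranks[target] += share
        ranks = new_ranks
    return ranks


# Hypothetical four-page web graph.
web = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
scores = pagerank(web)
for page in sorted(scores, key=scores.get, reverse=True):
    print(page, round(scores[page], 4))
```

Each pass of the inner loop corresponds to one round of the distributed job: the "emit a share to every linked page" step maps naturally to a map phase, and summing the shares per page maps to a reduce phase.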

Hire Me

If you are interested in working together, feel free to contact me.

Contact Details:

- Email: dasarathan.d@northeastern.edu

- LinkedIn: https://www.linkedin.com/in/deenadayalan-d/
